AML Logo Design Project

Interestingly, a non-designer designs the laboratory's symbol.

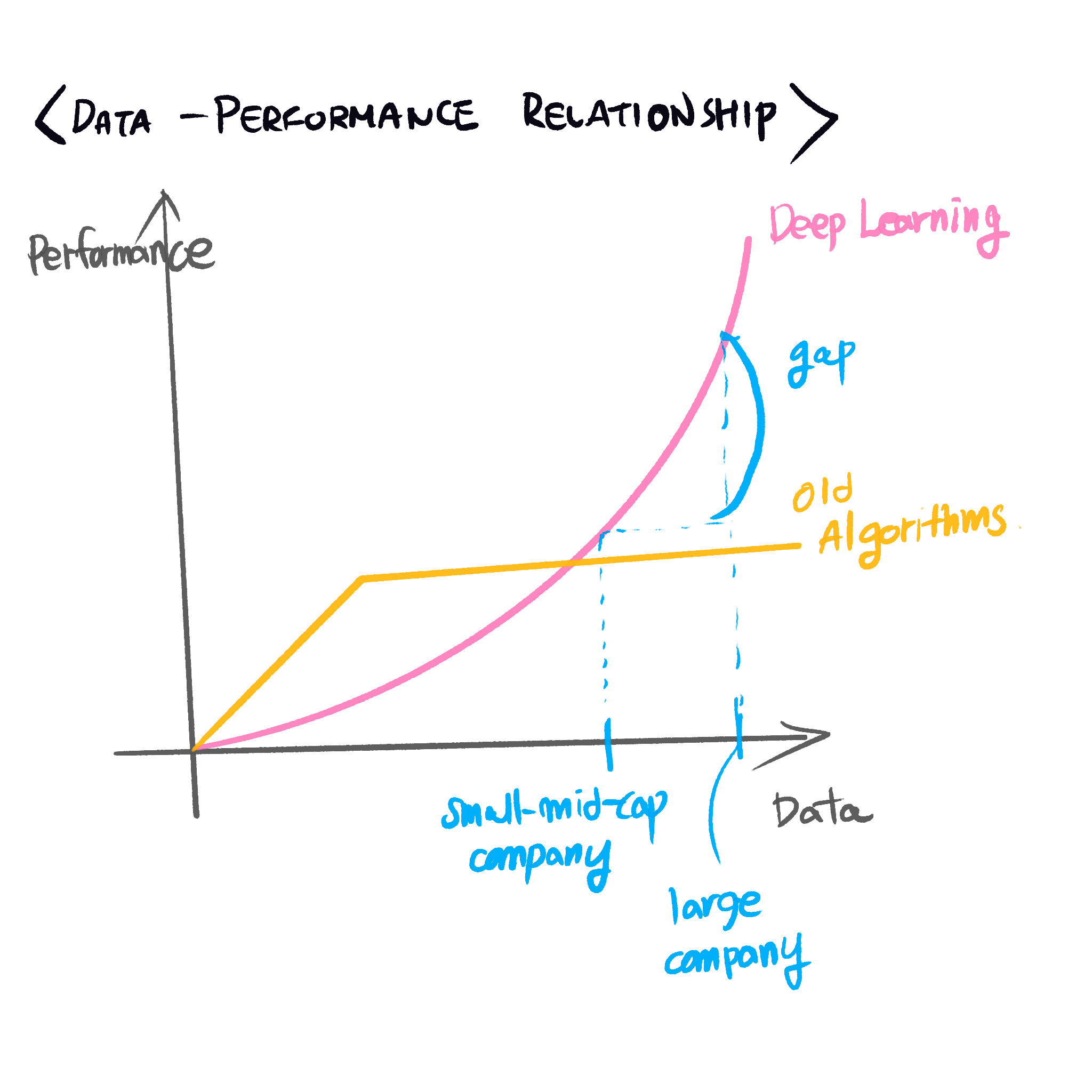

Nowadays, everyone can see that deep learning achieves state-of-the-art performance, and these technologies are expected to improve human life greatly. As important as good algorithms are, the quantity of data matters just as much to the companies that use them.

© 이 우철

The quantity of data really matters because it improves performance. Performance is tied to a company's profit, so the gap seems to grow wider and wider today.

But what about the other side of data? Does data really help predict the unknown? Isn't it biased?

And what about the algorithm? If a model's prediction decides whom to hire, or how many years of imprisonment a murderer receives, is it totally free from false negatives? A false negative here would be 'a murderer acquitted in court' or 'a company failing to offer the job to a truly competent applicant'.
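To make 'false negative' concrete, here is a minimal Python sketch that counts the four confusion-matrix outcomes of a binary decision and computes the false negative rate, FN / (FN + TP): the share of truly positive cases the model missed. The hiring labels are hypothetical, with 1 meaning 'competent' in the ground truth and 'offer the job' in the prediction.

```python
def confusion_counts(y_true, y_pred):
    """Count (TP, FP, TN, FN) for binary labels where 1 is the positive class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:  # t == 1 and p == 0: a competent applicant who was turned away
            fn += 1
    return tp, fp, tn, fn


def false_negative_rate(y_true, y_pred):
    """FNR = FN / (FN + TP); returns 0.0 when there are no positive cases."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    positives = tp + fn
    return fn / positives if positives else 0.0


# Hypothetical data: 1 = competent (truth) / offered the job (prediction).
y_true = [1, 1, 1, 1, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
print(confusion_counts(y_true, y_pred))     # → (3, 1, 2, 2)
print(false_negative_rate(y_true, y_pred))  # → 0.4
```

Here two of the five competent applicants were rejected, so the false negative rate is 0.4, even though the model is right on most of the eight cases overall.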


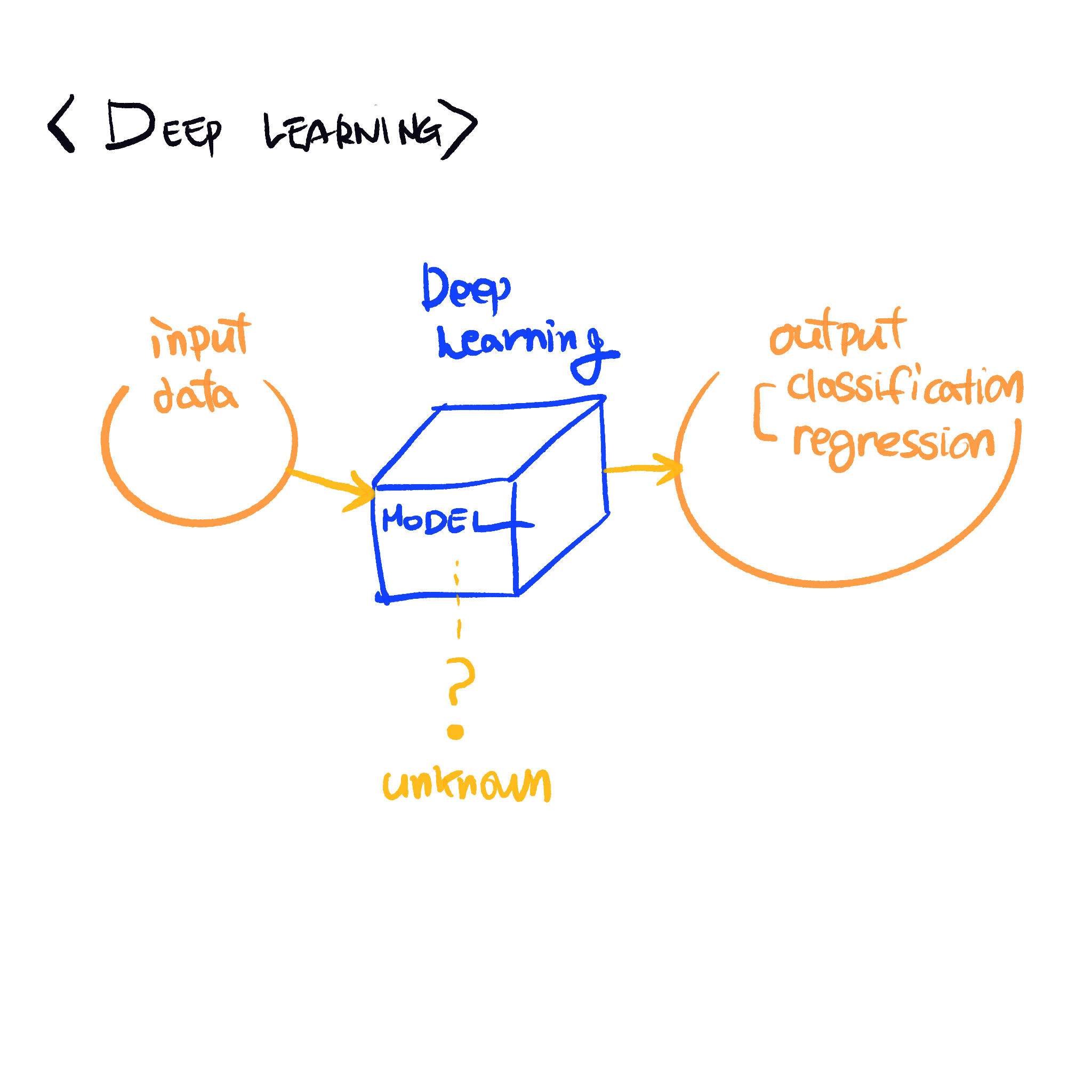

In machine learning, including deep learning, a model is trained on input data. Once training, optimization, and validation are finished, the learned parameters are fixed, and the model is then used for inference: when new input data arrives, it produces an output (either a 'classification' or a 'regression'). Simply put, a model is an 'inductive reasoning algorithm' that makes broad generalizations from specific observations, moving from the specific to the general.
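The train, validate, then infer cycle can be sketched in a few lines of Python. This is a simplified illustration, not how deep learning models are actually optimized: it swaps gradient-based optimization for closed-form least squares on a one-dimensional linear regression, and the data points (generated from y = 2x + 1) are hypothetical. The point is the flow from specific observations to a general rule that is then applied to an unseen input.

```python
def fit_line(xs, ys):
    """Training: ordinary least squares for y = w*x + b (closed form, 1-D)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(params, x):
    """Inference: apply the learned rule to a single input."""
    w, b = params
    return w * x + b


def mean_squared_error(params, xs, ys):
    """Validation: average squared error on held-out observations."""
    return sum((predict(params, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Specific observations (hypothetical), split into training and validation sets.
train_x, train_y = [0, 1, 2, 3], [1, 3, 5, 7]
val_x, val_y = [4, 5], [9, 11]

params = fit_line(train_x, train_y)                   # learn from training data
val_error = mean_squared_error(params, val_x, val_y)  # check on unseen data
# From here on, params stay fixed: this is the trained model.

# Inference on a brand-new input: from the specific to the general.
print(predict(params, 10))  # → 21.0
```

A classifier follows the same cycle; only the output changes from a number to a class label.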

Therefore, to build a good model and use it well, the observations (the input data, in deep learning terms) should not be biased. If the input data is biased, the model will be biased, and its outputs will be unfair and polluted as well.
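A deliberately naive Python sketch of how biased data turns into a biased model. The hypothetical helper `train_majority_by_group` simply memorizes the majority historical decision for each group, and the history is made-up data in which group "B" applicants, assumed to be equally qualified, were mostly rejected. Real learners are far more complex, but the mechanism is the same: if the labels encode past bias, the learned rule reproduces it.

```python
from collections import defaultdict


def train_majority_by_group(records):
    """Learn, for each group, the majority historical decision (1 = hired)."""
    totals = defaultdict(int)
    hires = defaultdict(int)
    for group, hired in records:
        totals[group] += 1
        hires[group] += hired
    # Predict "hire" for a group if at least half of its past applicants were hired.
    return {g: int(hires[g] / totals[g] >= 0.5) for g in totals}


# Hypothetical biased history: (group, hired) pairs for equally qualified applicants.
history = [("A", 1), ("A", 1), ("A", 0), ("B", 0), ("B", 0), ("B", 1)]
model = train_majority_by_group(history)
print(model)  # → {'A': 1, 'B': 0}
```

Every new applicant from group "B" is now rejected, however competent, because the only pattern available in the data was the past one.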


Another weak point of deep learning is that it is literally 'deep': it is hard to tell what is going on inside the model. If a model shows bias, will human society accept its decisions without any doubt?

This 'black box' problem of ML models will make people reluctant to use and trust them unless they produce perfectly (100%) fair outputs.

This is why FAT ML (Fairness, Accountability, and Transparency in Machine Learning) is becoming more and more important.

According to Microsoft Research, the fairness of AI systems is defined in terms of the harms they can cause:

There are many types of harms (see, e.g., the keynote by K. Crawford at NeurIPS 2017).

Allocation harms: These can occur when AI systems extend or withhold opportunities, resources, or information. Some of the key applications are in hiring, school admissions, and lending.

Quality-of-service harms: These can occur when a system does not work as well for one person as it does for another, even if no opportunities, resources, or information are extended or withheld. Examples include varying accuracy in face recognition, document search, or product recommendation.
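Both kinds of harm can be measured by breaking a metric down by group. The stdlib-only Python sketch below computes selection rate per group (related to allocation harms) and accuracy per group (related to quality-of-service harms), plus the gap between the highest and lowest selection rates, often called the demographic parity difference. The groups and labels are hypothetical; libraries such as Fairlearn provide fuller versions of this per-group breakdown (for example its `MetricFrame`).

```python
from collections import defaultdict


def per_group(metric, groups, y_true, y_pred):
    """Apply metric(y_true_subset, y_pred_subset) separately to each group."""
    buckets = defaultdict(lambda: ([], []))
    for g, t, p in zip(groups, y_true, y_pred):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: metric(t, p) for g, (t, p) in buckets.items()}


def selection_rate(y_true, y_pred):
    """Share of people who receive the positive decision (e.g. a loan)."""
    return sum(y_pred) / len(y_pred)


def accuracy(y_true, y_pred):
    """Share of predictions that match the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical decisions for two groups with identical ground truth.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 1, 1, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

sel = per_group(selection_rate, groups, y_true, y_pred)
acc = per_group(accuracy, groups, y_true, y_pred)
print(sel)  # allocation view          → {'A': 0.75, 'B': 0.25}
print(acc)  # quality-of-service view  → {'A': 1.0, 'B': 0.5}
print(max(sel.values()) - min(sel.values()))  # → 0.5
```

Looking only at overall accuracy (0.75 here) would hide both problems; disaggregating by group is what makes them visible.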

With the exponential development of machine learning algorithms and the growing magnitude of data that can be fed into learning, ML is entering human life at a faster pace than ever before.

For ML to aid the decision-making process, the visibility of the data used in learning, the transparency of the learning algorithms, and the fairness and interpretability of the outcome are crucial.

Deeper and more diverse research in fairness ML will help people feel cognitively comfortable when accepting AI into their life decisions.
